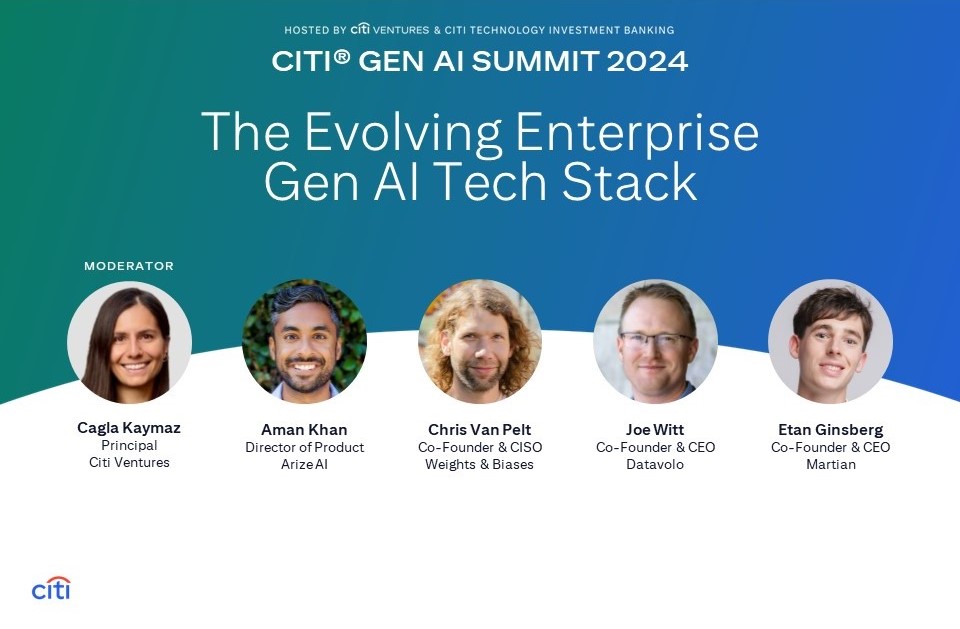

Citi® Gen AI Summit 2024 Takeaways: The Evolving Enterprise Gen AI Tech Stack

For the 2024 Citi® Gen AI Summit, I had the incredible pleasure of hosting a panel exploring the tools and approaches needed to get Gen AI solutions to production at enterprise scale. Joining me were four insightful, brilliant AI startup leaders: Aman Khan, Director of Product at Arize AI, an AI observability and evaluation platform; Chris Van Pelt, Co-Founder and CISO of Weights & Biases, an AI developer platform for building models and applications; Etan Ginsberg, Co-Founder and Co-CEO of Martian, which provides dynamic LLM routing solutions; and Joe Witt, Co-Founder and CEO of Datavolo, a data pipeline solution for AI systems and Citi Ventures portfolio company.

Together we delved into topics such as unstructured data pipelines; retrieval-augmented generation (RAG); model routing; LLM development and operations (LLMOps); and more.

Read on for some of my key takeaways from our talk.

Using unstructured data has become more important, and more complex, than ever

With most enterprise data existing in unstructured form (i.e., as emails, PDFs, audio and image files, and other content not easily organized into a database), the ability to derive business insights from unstructured data has become critical.

To do so, enterprises must build data pipelines that deliver that data to the models and applications that need it, in a form they can use. That is challenging in any setting, but it is especially complex for Gen AI: each RAG application uses a different ingestion/extraction process, and a single piece of data may be piped to multiple applications. To address this, startups like Datavolo are building next-gen data pipelines that can extract, clean, standardize, enrich and distribute relevant information from unstructured data in a secure, simple and scalable manner.
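To make the extract, clean, standardize, enrich and distribute flow a bit more concrete, here is a minimal Python sketch. It is purely illustrative and does not represent how Datavolo or any other vendor implements its product; the `Document` type, the stage functions and `run_pipeline` are all hypothetical names invented for this example, and extraction is assumed to have already produced raw text.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Iterable


@dataclass
class Document:
    source: str                      # hypothetical label, e.g. "email" or "PDF"
    text: str                        # raw text, assumed already extracted
    metadata: dict = field(default_factory=dict)


def clean(doc: Document) -> Document:
    # Collapse whitespace runs left over from extraction.
    text = re.sub(r"\s+", " ", doc.text).strip()
    return Document(doc.source, text, dict(doc.metadata))


def standardize(doc: Document) -> Document:
    # Normalize the source label so downstream consumers see one spelling.
    return Document(doc.source.lower(), doc.text, dict(doc.metadata))


def enrich(doc: Document) -> Document:
    # Attach simple derived metadata; a real pipeline might add entities or tags.
    meta = dict(doc.metadata)
    meta["word_count"] = len(doc.text.split())
    return Document(doc.source, doc.text, meta)


def run_pipeline(
    doc: Document,
    stages: Iterable[Callable[[Document], Document]],
    sinks: Iterable[Callable[[Document], object]],
) -> Document:
    # Apply each stage in order, then fan the result out to every consumer.
    for stage in stages:
        doc = stage(doc)
    for sink in sinks:
        sink(doc)
    return doc


# Usage: one document is processed once and delivered to two applications.
rag_index: list = []
audit_log: list = []
result = run_pipeline(
    Document("PDF", "  Quarterly   report\n\n for Q3 "),
    stages=[clean, standardize, enrich],
    sinks=[rag_index.append, audit_log.append],
)
```

The point of the sketch is the fan-out at the end: the same processed document reaches several consumers, which is the situation described above where a single piece of data feeds multiple applications.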

Gen AI models are not one-size-fits-all

With more Gen AI models to choose from than ever before, model routing is emerging as a compelling concept for enterprises. It’s not entirely clear why certain models answer certain types of prompts with higher accuracy than others, but inserting a model routing layer like Martian’s into a Gen AI application can direct each prompt to the right model for the job. This can boost app performance and even help cut costs by leveraging smaller, cheaper models that can address the prompt as well as, or even better than, larger, more expensive ones.
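As a rough illustration of the idea, the Python sketch below routes each prompt to the cheapest model that is expected to handle it well enough. This is a deliberately simple, rule-based stand-in and not a description of Martian's approach; the `ModelOption` type, the keyword-based `classify` function, the quality scores and the prices are all made-up assumptions for the example.

```python
from dataclasses import dataclass


@dataclass
class ModelOption:
    name: str
    cost_per_1k_tokens: float   # hypothetical price
    quality: dict               # hypothetical per-category scores in [0, 1]


def classify(prompt: str) -> str:
    # Toy keyword classifier; a real router would use something far richer.
    lowered = prompt.lower()
    if any(k in lowered for k in ("def ", "function", "bug", "code")):
        return "code"
    if any(k in lowered for k in ("summarize", "summary", "tl;dr")):
        return "summarization"
    return "general"


def route(prompt: str, models: list, min_quality: float = 0.8) -> ModelOption:
    category = classify(prompt)
    eligible = [m for m in models if m.quality.get(category, 0.0) >= min_quality]
    if not eligible:
        # Nothing clears the bar: fall back to the strongest model available.
        return max(models, key=lambda m: m.quality.get(category, 0.0))
    # Otherwise pick the cheapest model that is good enough.
    return min(eligible, key=lambda m: m.cost_per_1k_tokens)


models = [
    ModelOption("small", 0.1, {"summarization": 0.85, "code": 0.5, "general": 0.7}),
    ModelOption("large", 1.0, {"summarization": 0.9, "code": 0.9, "general": 0.9}),
]

summary_choice = route("Summarize this memo", models)
code_choice = route("Fix the bug in this function", models)
```

With these assumed scores, the summarization prompt goes to the smaller model and the coding prompt goes to the larger one, which mirrors the cost argument above: the expensive model is used only where the cheaper one is not expected to be good enough.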

Evaluating LLMs takes more than traditional DevOps approaches

Many engineers might be tempted to validate an LLM-based application just like they would an API built with deterministic code, but Gen AI is a whole new ballgame. Rather than running simple unit tests, enterprise engineering teams should implement rigorous LLM evaluation frameworks and put processes in place to both ensure they are building robust applications and smooth the transition as new models come out and prompts are tweaked. When they do so — given how large Gen AI systems are and how many dependencies they contain — it’s critical that they not only have metrics around what they’re optimizing for, but also have solutions in place to prevent unintended outputs.

To avoid hitting major roadblocks when building Gen AI systems, enterprises can actually use LLMs to help with LLM evaluations during production. LLMs are well suited to assess metrics such as how well another LLM follows a prompt, whether or not it uses data from the prompt correctly and the tone it takes in its output. Using LLM-based evaluation tools at various stages throughout the development process can help ensure the highest chance of success when building Gen AI applications.
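One way to picture LLM-based evaluation is the Python sketch below, which asks a "judge" model to score an answer on the three dimensions mentioned above: instruction following, correct use of data from the prompt, and tone. It is an assumption-laden sketch rather than any vendor's framework; the rubric wording, the 1 to 5 scale and the function names are invented here, and the judge is passed in as a plain callable so that no particular model API is implied.

```python
import re
from typing import Callable, Optional

# Hypothetical rubric template sent to the judge model.
RUBRIC = (
    "You are grading another model's answer.\n"
    "Criterion: {criterion}\n"
    "Prompt given to the model:\n{prompt}\n"
    "Model answer:\n{answer}\n"
    "Reply with a single integer score from 1 (poor) to 5 (excellent)."
)

# Hypothetical criteria, mirroring the metrics named in the text.
CRITERIA = {
    "instruction_following": "Does the answer do what the prompt asked?",
    "grounding": "Does the answer use the data in the prompt correctly?",
    "tone": "Is the tone appropriate for a business audience?",
}


def parse_score(reply: str) -> Optional[int]:
    # Take the first standalone digit from 1 to 5; None if the judge gave no score.
    match = re.search(r"\b([1-5])\b", reply)
    return int(match.group(1)) if match else None


def evaluate(prompt: str, answer: str, judge: Callable[[str], str]) -> dict:
    # Ask the judge once per criterion and collect the parsed scores.
    scores = {}
    for name, criterion in CRITERIA.items():
        reply = judge(RUBRIC.format(criterion=criterion, prompt=prompt, answer=answer))
        scores[name] = parse_score(reply)
    return scores


def fake_judge(rubric_text: str) -> str:
    # Stand-in for a real model call, used only to exercise the code path.
    return "Score: 4" if "tone" in rubric_text else "5"


scores = evaluate("List Q3 revenue by region.", "EMEA: 10, APAC: 12", fake_judge)
```

In practice `fake_judge` would be replaced by a call to whichever model a team uses for grading, and the resulting scores can be tracked over time so that regressions show up when a model or prompt changes.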

Enterprise AI is evolving rapidly

It was a special opportunity to sit down with leaders in the enterprise AI space to discuss the best approaches to taking Gen AI solutions to the next level — and given the rapid evolution in the ecosystem, everyone agreed that many more exciting developments are soon to come.

Are you a founder building enterprise-grade Gen AI solutions? I’d love to talk! Reach out to me at cagla.kaymaz@citi.com.